Project Statistics

Learn about project statistics in the super.AI platform.

Your project's statistics page provides an at-a-glance overview of how your project is progressing. You can use this information to decide whether any adjustments are necessary. The Statistics section of your super.AI dashboard provides metrics on your project’s progress, speed, and quality. Here’s how to get there:

- Open your super.AI dashboard

- Open the relevant project

- Click Statistics in the left-hand menu

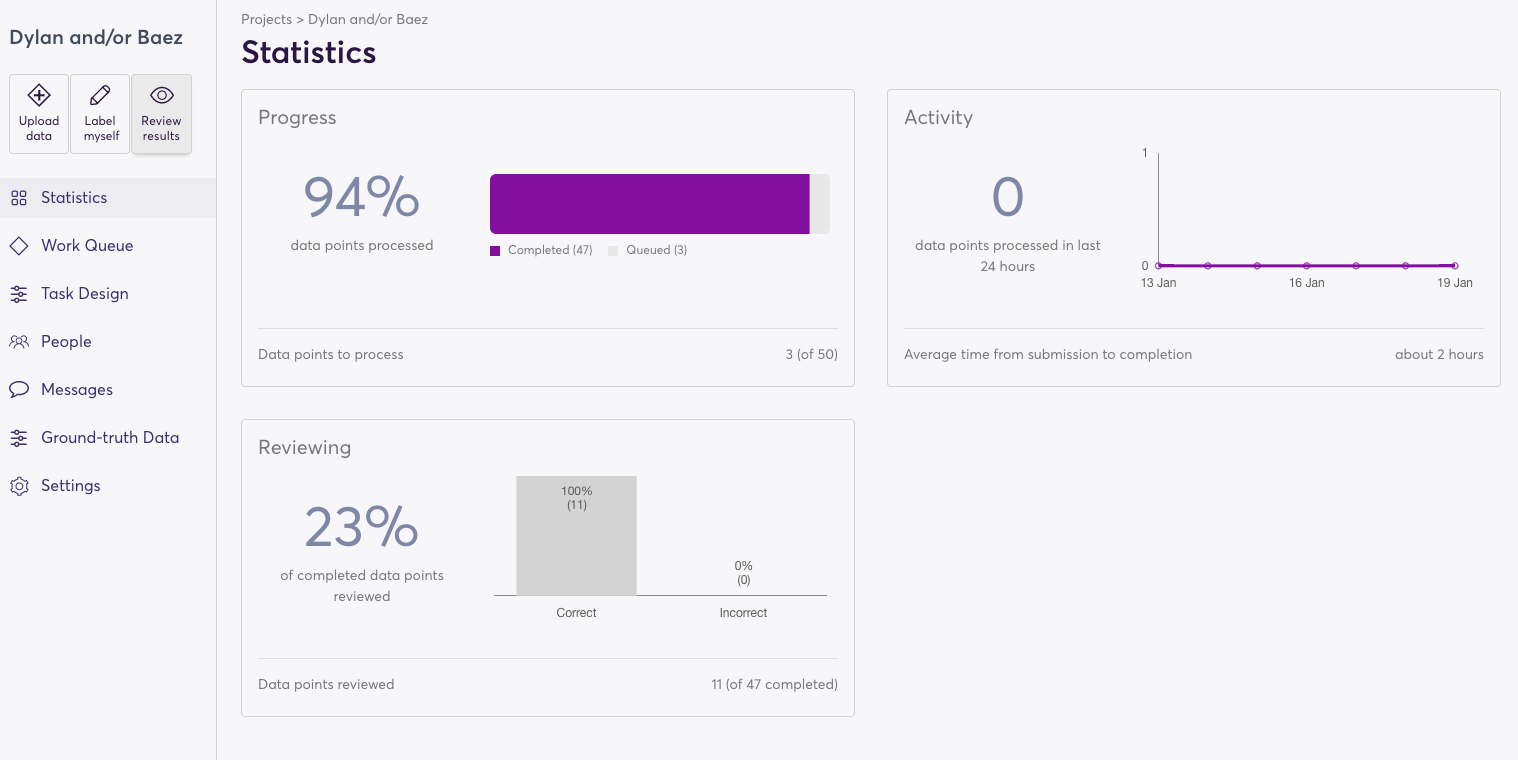

On this screen, you will see three sections: Progress, Activity, and Reviewing. You will also see Human vs AI if you have added an AI model and enabled active learning within your project.

Progress gives a high-level picture of how close your project is to finishing all currently submitted data points. The meter shows how many data points in your project’s work queue are queued, processing, failed, paused, expired, or completed, along with the overall percentage of completed data points. For this percentage, data points that expired or failed count as completed. Cancelled jobs are excluded from all of these measurements. At the bottom of this section, you can also see how many data points remain incomplete, shown as a number out of the total.
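To make the Progress arithmetic concrete, here is a minimal Python sketch, assuming each data point carries one of the status strings named above. The function `progress_summary` and the input shape are hypothetical; this illustrates the counting rules described in this section (expired and failed count toward the completion percentage, cancelled is ignored), not super.AI's actual implementation.

```python
from collections import Counter

# Statuses that count toward the completion percentage, per the description above.
# Illustrative assumption only; not super.AI's code.
DONE_STATUSES = {"completed", "expired", "failed"}

def progress_summary(statuses: list[str]) -> dict:
    """Summarize data point statuses the way the Progress meter is described."""
    # Cancelled data points are excluded from every count.
    counts = Counter(s for s in statuses if s != "cancelled")
    total = sum(counts.values())
    done = sum(counts[s] for s in DONE_STATUSES)
    percent_done = 100.0 * done / total if total else 0.0
    return {
        "counts": dict(counts),
        "percent_done": percent_done,
        "incomplete": total - done,  # queued, processing, and paused points
        "total": total,
    }

summary = progress_summary(
    ["completed", "completed", "failed", "queued", "processing", "cancelled"]
)
print(f"{summary['percent_done']:.0f}% done, "
      f"{summary['incomplete']} of {summary['total']} incomplete")
# 60% done, 2 of 5 incomplete
```

Note that the failed data point raises the percentage even though it produced no usable output, which is why the incomplete count at the bottom of the section is a useful companion figure.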

Activity shows the rate at which your project is processing data. The line graph displays how many data points have been completed on each day over the past week. On the left of the graph, you can see how many data points were completed in the last 24 hours. Below the graph, you can find the average time that passes between data point submission and completion.
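The three Activity figures can be sketched in Python as follows, assuming you have a submission and a completion timestamp for each completed data point. The record shape, the function `activity_summary`, and the exact window definitions (a rolling 24 hours, and the 7 calendar days ending today for the weekly graph) are assumptions for illustration; the dashboard may define these windows differently.

```python
from datetime import datetime, timedelta

def activity_summary(records, now):
    """Summarize completed data points as the Activity section describes.

    records: list of (submitted_at, completed_at) datetime pairs, one per
    completed data point (a hypothetical input shape).
    """
    # Completions in the rolling 24-hour window ending at `now`.
    window_start = now - timedelta(hours=24)
    last_24h = sum(1 for _, done in records if window_start <= done <= now)

    # Completions per calendar day for the 7 days ending on `now`'s date.
    days = [(now - timedelta(days=i)).date() for i in range(6, -1, -1)]
    per_day = {day: 0 for day in days}
    for _, done in records:
        if done.date() in per_day:
            per_day[done.date()] += 1

    # Average time from submission to completion.
    durations = [done - submitted for submitted, done in records]
    avg = sum(durations, timedelta()) / len(durations) if durations else None
    return last_24h, per_day, avg

now = datetime(2024, 5, 8, 12, 0)
records = [
    (datetime(2024, 5, 8, 9, 0), datetime(2024, 5, 8, 10, 0)),  # 1 h, inside the 24 h window
    (datetime(2024, 5, 7, 8, 0), datetime(2024, 5, 7, 11, 0)),  # 3 h, just outside the 24 h window
    (datetime(2024, 5, 1, 0, 0), datetime(2024, 5, 1, 2, 0)),   # 2 h, older than the 7-day graph
]
last_24h, per_day, avg = activity_summary(records, now)
print(last_24h, sum(per_day.values()), avg)  # 1 2 2:00:00
```

In this sketch the average covers every completed data point passed in, not only those in the past week; narrow `records` first if you want a weekly average.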

Reviewing provides insight into your project’s quality. You can see how many, and what percentage, of completed data points have been reviewed as correct or incorrect by the project owner, an admin, or a reviewer. Reviewing data points also helps super.AI gradually improve the quality of your project’s output, because data points marked as correct during review become ground truth data. You can find out more about the reviewing process on our How to review outputs page.
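As a rough illustration of the Reviewing figures, the Python sketch below counts review outcomes and expresses them as a share of all completed data points, assuming each completed point is either unreviewed (`None`) or labeled `"correct"` or `"incorrect"`. The function `review_summary` and the input shape are hypothetical, not part of the super.AI platform.

```python
from collections import Counter

def review_summary(reviews):
    """Count and percentage of completed data points reviewed as correct or incorrect.

    reviews: one entry per completed data point, "correct", "incorrect",
    or None if it has not been reviewed (a hypothetical input shape).
    """
    total = len(reviews)
    counts = Counter(r for r in reviews if r is not None)
    summary = {}
    for label in ("correct", "incorrect"):
        # Percentages are relative to all completed points, reviewed or not.
        share = 100.0 * counts[label] / total if total else 0.0
        summary[label] = (counts[label], share)
    # Indices of points marked correct, i.e. those that become ground truth.
    ground_truth = [i for i, r in enumerate(reviews) if r == "correct"]
    return summary, ground_truth

summary, ground_truth = review_summary(["correct", "correct", "incorrect", None])
print(summary)       # {'correct': (2, 50.0), 'incorrect': (1, 25.0)}
print(ground_truth)  # [0, 1]
```

Because the denominator includes unreviewed points, the correct and incorrect percentages need not add up to 100; the gap is the share still awaiting review.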

Human vs AI shows you what percentage of your project's data points have been processed by humans and what percentage by AI. This statistic is available when you have added an AI model and enabled active learning within your project.
