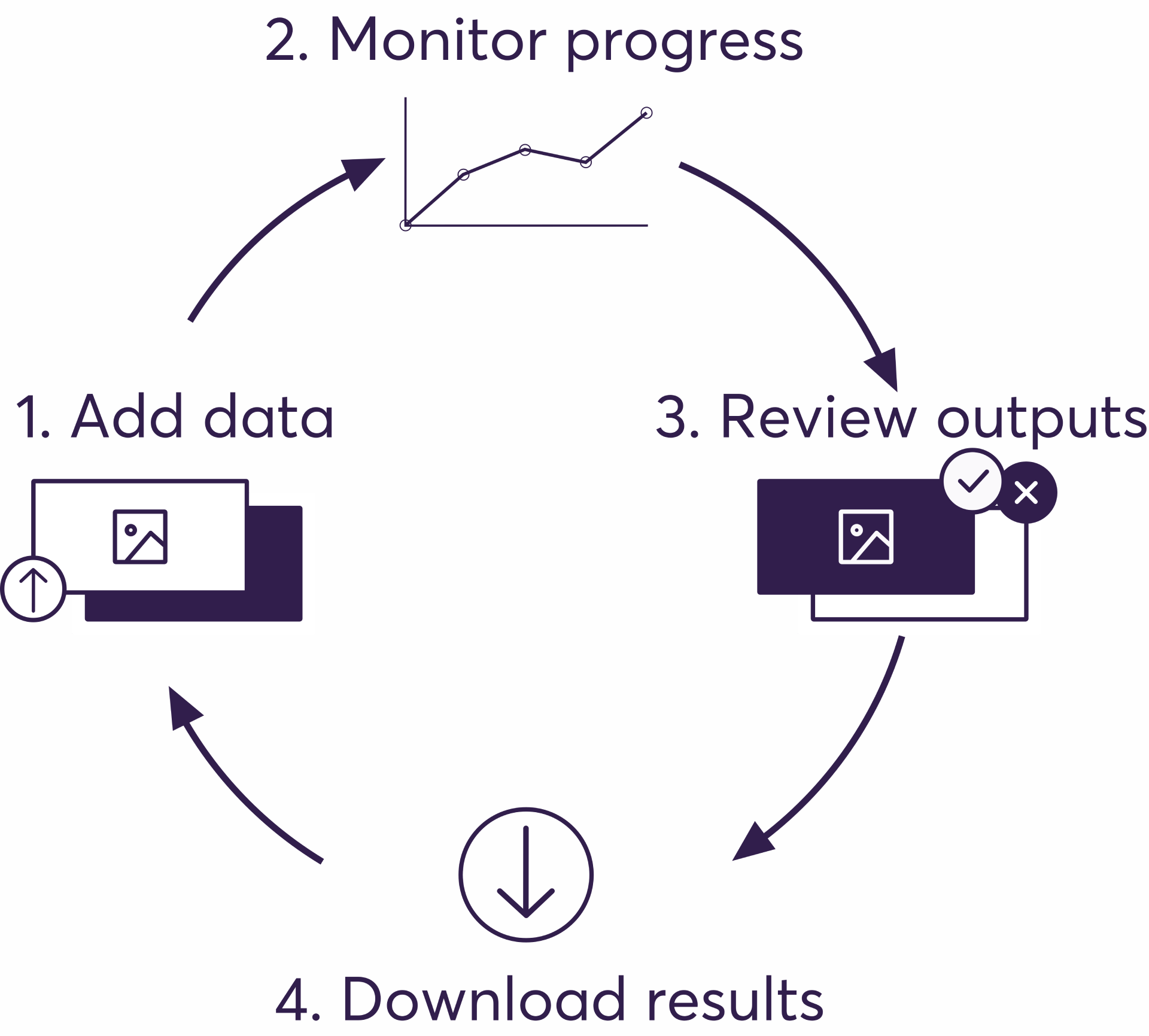

Super.AI enables a four-step data lifecycle that allows for continuous improvement of your project: adding data, monitoring progress, reviewing outputs, and downloading results.

Below, we're going to walk you through how to make the most of your data in the super.AI system by following the data lifecycle steps. Throughout the post, we've linked extensively to our supporting documentation, where you can get a better sense of how to use our product.

Super.AI provides a variety of ways for you to add data points to your project for processing. Through the super.AI dashboard, you can upload files directly or add data in bulk by formatting your inputs in a JSON or CSV file. Our API also allows you to automate data submission using cURL, Python, or our command line interface (CLI).
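To give a feel for what a bulk input file can look like, here is a minimal sketch that turns a list of file URLs into both a JSON and a CSV payload using only the Python standard library. The field name `image_url` and the helper `build_bulk_inputs` are our own placeholders for illustration, not the actual super.AI schema; check the input format your project expects before uploading.

```python
import csv
import io
import json


def build_bulk_inputs(urls, field="image_url"):
    """Return (json_text, csv_text) with one data point per URL.

    `field` is a hypothetical input name; replace it with the
    input field your project actually defines.
    """
    records = [{field: url} for url in urls]

    # JSON: a list of objects, one object per data point.
    json_text = json.dumps(records, indent=2)

    # CSV: a header row followed by one row per data point.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[field], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)

    return json_text, buffer.getvalue()


urls = ["https://example.com/cat.png", "https://example.com/dog.png"]
json_text, csv_text = build_bulk_inputs(urls)
print(csv_text)
```

Either string can be written to disk and uploaded through the dashboard as a bulk file.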

Our free data storage allows you to securely store and access your files if you do not already have them hosted online.

You can quickly gain an overview of how your project is moving along by taking a look at its statistics page in the super.AI dashboard. Here, we provide a snapshot of overall progress, labeling activity, and output reviewing.

If you would like quality estimates while monitoring, you need to create ground truth data: data points that super.AI knows to be correctly labeled.
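The idea behind a ground-truth-based quality estimate can be sketched in a few lines: compare the outputs for data points with known labels against those labels and report the fraction that match. This is only an illustration of the concept, not a description of how super.AI calculates its estimates; the function name, the data point IDs, and the labels below are all made up.

```python
def estimate_accuracy(outputs, ground_truth):
    """Fraction of ground truth data points whose output matches the known label.

    Both arguments map a data point ID to a label. Returns None when
    no ground truth data point has an output yet.
    """
    shared = [dp_id for dp_id in ground_truth if dp_id in outputs]
    if not shared:
        return None
    correct = sum(outputs[dp_id] == ground_truth[dp_id] for dp_id in shared)
    return correct / len(shared)


# Hypothetical example: three labeled outputs, two of which have ground truth.
outputs = {"dp1": "cat", "dp2": "dog", "dp3": "cat"}
ground_truth = {"dp1": "cat", "dp2": "cat"}
accuracy = estimate_accuracy(outputs, ground_truth)
print(accuracy)
```

The more ground truth data points you provide, the more representative an estimate of this kind becomes.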

Reviewing data point outputs is an essential aspect of quality control within your projects. All you need to do is mark outputs as correct or incorrect using the super.AI dashboard review tool (there’s also the option to edit and relabel those that are incorrect).

The benefits of reviewing outputs are twofold:

At any time, you can download individual results, batches of results, or grab all your project’s data at once. You can choose to receive your results in either JSON or CSV format. This allows you to further process the data externally or begin using the labeled output to train your own machine learning model.
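As a rough sketch of the "process externally" step, the snippet below parses a JSON export and turns it into (input, label) pairs that could feed a training pipeline. The record layout, with `input` and `output` keys, is an assumption made for this example and may not match the structure of your actual download; `to_training_pairs` is our own hypothetical helper.

```python
import json


def to_training_pairs(results_json):
    """Convert a JSON export into a list of (input, label) tuples.

    Assumes each record has "input" and "output" keys (hypothetical
    layout). Records without an output are skipped.
    """
    records = json.loads(results_json)
    return [
        (record["input"], record["output"])
        for record in records
        if record.get("output") is not None
    ]


# Stand-in for the contents of a downloaded results file.
results_json = """
[
  {"input": "https://example.com/cat.png", "output": "cat"},
  {"input": "https://example.com/dog.png", "output": "dog"},
  {"input": "https://example.com/unknown.png", "output": null}
]
"""
pairs = to_training_pairs(results_json)
print(pairs)
```

In practice you would read the string from the downloaded file rather than defining it inline.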
